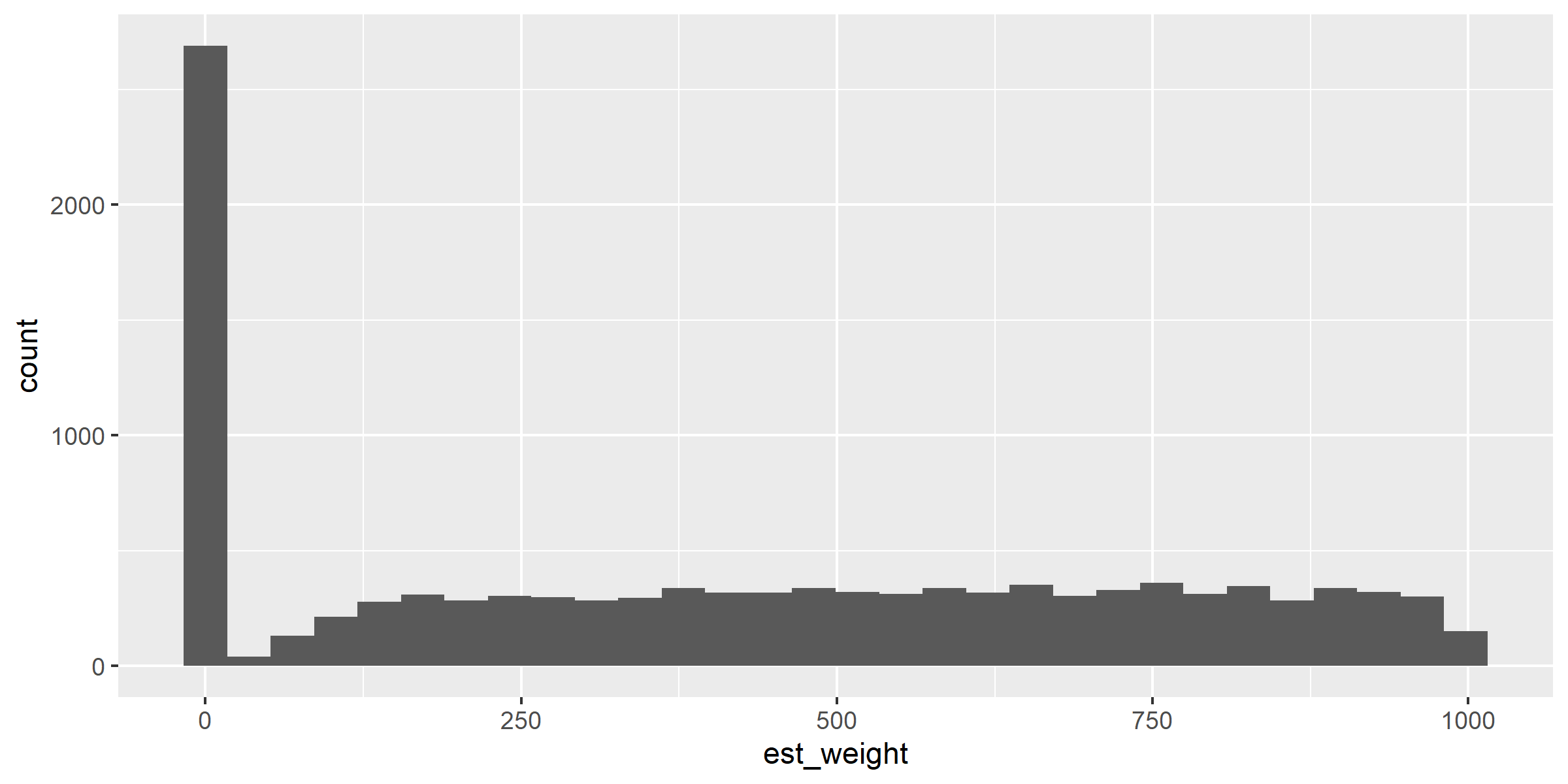

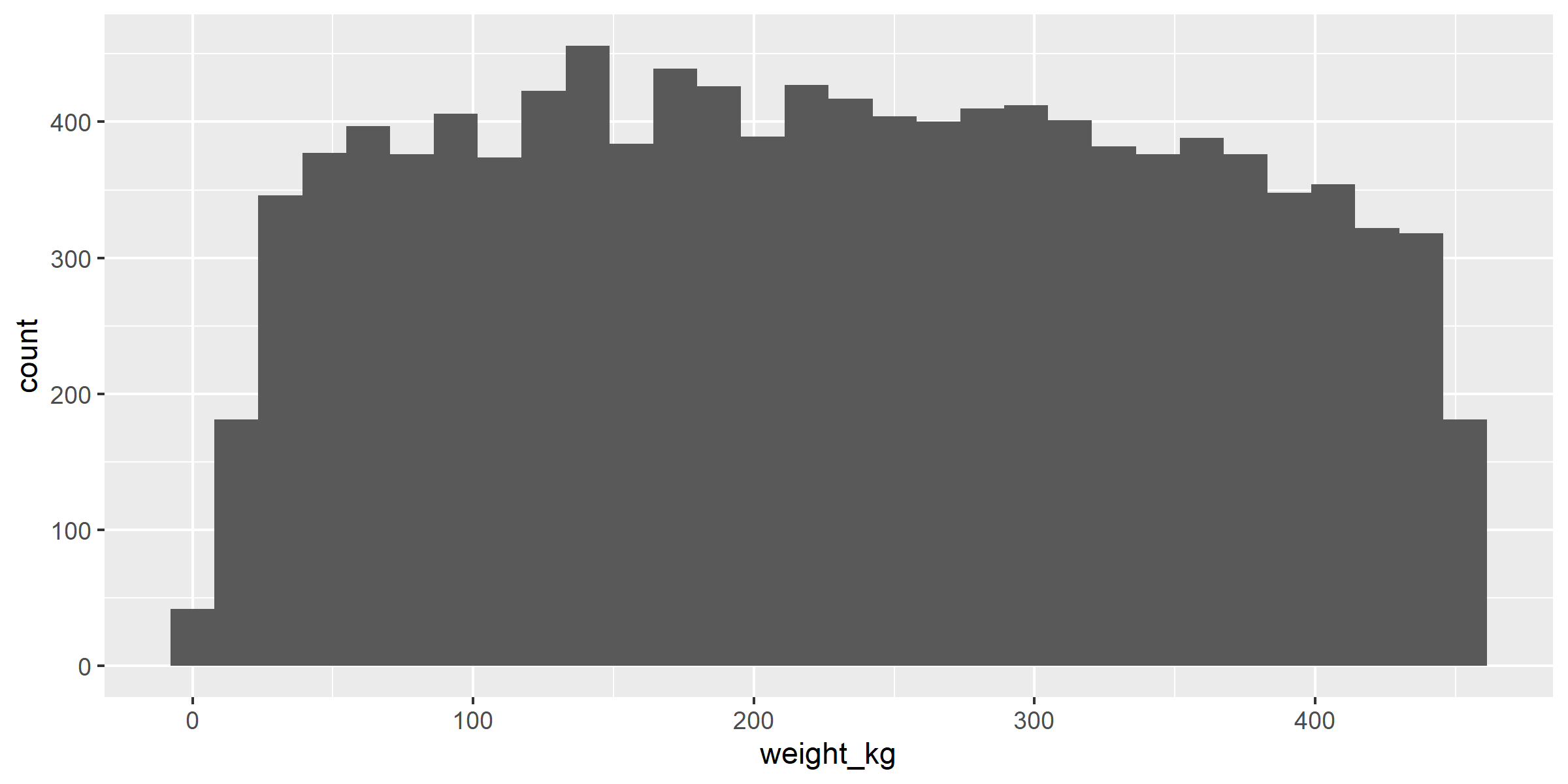

class: center, middle, hide-logo <style type="text/css"> pre { background: #F8F8F8; max-width: 100%; overflow-x: scroll; } </style> <style type="text/css"> .center2 { margin: 0; position: absolute; top: 50%; left: 50%; -ms-transform: translate(-50%, -50%); transform: translate(-50%, -50%); } </style> <style type="text/css"> .scroll-output { height: 80%; overflow-y: scroll; } </style> # Introduction to Tidymodels ## by <img src="GraphicsSlides/Logo RUG hell.png" width="50%" /> ##### Author/Presenter: Mathias Steilen ##### Last updated: _2022-11-15 13:06:11_ --- # Tidymodels <br> .center[ <img src="GraphicsSlides/tidymodels.png" width="100%" /> _[(Source)](https://www.tidymodels.org/)_ ] --- # Goals for this session - Know how to model with `tidymodels` by working live on a data set - Be able to use that knowledge and the code to apply it to other data and with other models <br> .center[ <img src="GraphicsSlides/to do.png" width="50%" /> ] --- # Today's data set .center[ <img src="GraphicsSlides/pumpkin.jpg" width="50%" /> ] > The Great Pumpkin Commonwealth's (GPC) mission cultivates the hobby of growing giant pumpkins throughout the world by establishing standards and regulations that ensure quality of fruit, fairness of competition, recognition of achievement, fellowship and education for all participating growers and weigh-off sites. ([TidyTuesday Link](https://github.com/rfordatascience/tidytuesday/blob/master/data/2021/2021-10-19/readme.md)) --- # Off we go ```r pumpkins <- readr::read_csv('https://raw.githubusercontent.com/rfordatascience/tidytuesday/master/data/2021/2021-10-19/pumpkins.csv') ``` ``` ## Rows: 28065 Columns: 14 ## ── Column specification ──────────────────────────────────────────────────────── ## Delimiter: "," ## chr (14): id, place, weight_lbs, grower_name, city, state_prov, country, gpc... ## ## ℹ Use `spec()` to retrieve the full column specification for this data. ## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message. ``` ```r library(tidyverse) library(tidymodels) library(doParallel) library(vip) ``` --- ### Inspecting the data ```r glimpse(pumpkins) ``` ``` ## Rows: 28,065 ## Columns: 14 ## $ id <chr> "2013-F", "2013-F", "2013-F", "2013-F", "2013-F", "2… ## $ place <chr> "1", "2", "3", "4", "5", "5", "7", "8", "9", "10", "… ## $ weight_lbs <chr> "154.50", "146.50", "145.00", "140.80", "139.00", "1… ## $ grower_name <chr> "Ellenbecker, Todd & Sequoia", "Razo, Steve", "Ellen… ## $ city <chr> "Gleason", "New Middletown", "Glenson", "Combined Lo… ## $ state_prov <chr> "Wisconsin", "Ohio", "Wisconsin", "Wisconsin", "Wisc… ## $ country <chr> "United States", "United States", "United States", "… ## $ gpc_site <chr> "Nekoosa Giant Pumpkin Fest", "Ohio Valley Giant Pum… ## $ seed_mother <chr> "209 Werner", "150.5 Snyder", "209 Werner", "109 Mar… ## $ pollinator_father <chr> "Self", NA, "103 Mackinnon", "209 Werner '12", "open… ## $ ott <chr> "184.0", "194.0", "177.0", "194.0", "0.0", "190.0", … ## $ est_weight <chr> "129.00", "151.00", "115.00", "151.00", "0.00", "141… ## $ pct_chart <chr> "20.0", "-3.0", "26.0", "-7.0", "0.0", "-1.0", "-4.0… ## $ variety <chr> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, … ``` The target and many other numeric values are still categorical. --- ### Recoding variables .scroll-output[ ```r pumpkins <- pumpkins %>% mutate(across(c(place, weight_lbs, ott, est_weight, pct_chart), as.numeric)) glimpse(pumpkins) ``` ``` ## Rows: 28,065 ## Columns: 14 ## $ id <chr> "2013-F", "2013-F", "2013-F", "2013-F", "2013-F", "2… ## $ place <dbl> 1, 2, 3, 4, 5, 5, 7, 8, 9, 10, 11, 12, NA, 13, 14, 1… ## $ weight_lbs <dbl> 154.5, 146.5, 145.0, 140.8, 139.0, 139.0, 136.5, 136… ## $ grower_name <chr> "Ellenbecker, Todd & Sequoia", "Razo, Steve", "Ellen… ## $ city <chr> "Gleason", "New Middletown", "Glenson", "Combined Lo… ## $ state_prov <chr> "Wisconsin", "Ohio", "Wisconsin", "Wisconsin", "Wisc… ## $ country <chr> "United States", "United States", "United States", "… ## $ gpc_site <chr> "Nekoosa Giant Pumpkin Fest", "Ohio Valley Giant Pum… ## $ seed_mother <chr> "209 Werner", "150.5 Snyder", "209 Werner", "109 Mar… ## $ pollinator_father <chr> "Self", NA, "103 Mackinnon", "209 Werner '12", "open… ## $ ott <dbl> 184, 194, 177, 194, 0, 190, 190, 182, 0, 0, 0, 177, … ## $ est_weight <dbl> 129, 151, 115, 151, 0, 141, 142, 124, 0, 0, 0, 115, … ## $ pct_chart <dbl> 20, -3, 26, -7, 0, -1, -4, 10, 0, 0, 0, 14, 4, 8, 14… ## $ variety <chr> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, … ``` ] --- ### id ```r pumpkins %>% * separate(id, sep = "-", into = c("year", "type")) %>% count(type) ``` ``` ## # A tibble: 6 × 2 ## type n ## <chr> <int> ## 1 F 2756 ## 2 L 1965 ## 3 P 15965 ## 4 S 1686 ## 5 T 3166 ## 6 W 2527 ``` The documentation on GitHub states: > Types: F = "Field Pumpkin", P = "Giant Pumpkin", S = "Giant Squash", W = "Giant Watermelon", L = "Long Gourd" (length in inches, not weight in pounds), T = Tomato --- ### id ```r pumpkins <- pumpkins %>% separate(id, sep = "-", into = c("year", "type")) %>% * mutate(type = case_when( * type == "F" ~ "Field Pumpkin", * type == "P" ~ "Giant Pumpkin", * type == "S" ~ "Giant Squash", * type == "W" ~ "Giant Watermelon", * type == "L" ~ "Long Gourd", * type == "T" ~ "Tomato" * )) ``` --- ### id ```r pumpkins %>% count(type, sort = T) ``` ``` ## # A tibble: 6 × 2 ## type n ## <chr> <int> ## 1 Giant Pumpkin 15965 ## 2 Tomato 3166 ## 3 Field Pumpkin 2756 ## 4 Giant Watermelon 2527 ## 5 Long Gourd 1965 ## 6 Giant Squash 1686 ``` --- ### id For the purpose of this session, let's focus on predicting giant pumpkins' weights. ```r pumpkins <- pumpkins %>% filter(type %in% c("Giant Pumpkin")) ``` ```r pumpkins %>% count(type, sort = T) ``` ``` ## # A tibble: 1 × 2 ## type n ## <chr> <int> ## 1 Giant Pumpkin 15965 ``` --- ### grower_name ```r pumpkins %>% count(grower_name, sort = T) ``` ``` ## # A tibble: 6,508 × 2 ## grower_name n ## <chr> <int> ## 1 Platte, Joe 47 ## 2 Sherwood, Jim 42 ## 3 Gansert, Norman 41 ## 4 Wolf, Andy 41 ## 5 Miller, Gary 38 ## 6 Karkos, Udo 34 ## 7 Melka, Friedrich 34 ## 8 Werner, Quinn 33 ## 9 Tobeck, Cindy 32 ## 10 Stelts, Dave & Carol 31 ## # … with 6,498 more rows ``` Some recurring names, less frequent should be lumped together. --- ### city ```r pumpkins %>% count(city, sort = T) ``` ``` ## # A tibble: 2,737 × 2 ## city n ## <chr> <int> ## 1 <NA> 1569 ## 2 Napa 152 ## 3 Barnesville 93 ## 4 Kasterlee 90 ## 5 Paredes 73 ## 6 Gentilly 68 ## 7 Olympia 65 ## 8 Auburn 64 ## 9 Johnston 62 ## 10 Kaukauna 60 ## # … with 2,727 more rows ``` Same again. --- ### state_prov ```r pumpkins %>% count(state_prov, sort = T) ``` ``` ## # A tibble: 134 × 2 ## state_prov n ## <chr> <int> ## 1 Other 1549 ## 2 Wisconsin 1031 ## 3 California 991 ## 4 Ontario 836 ## 5 Ohio 713 ## 6 Washington 712 ## 7 Michigan 633 ## 8 Pennsylvania 540 ## 9 New York 505 ## 10 Oregon 464 ## # … with 124 more rows ``` Again. --- ### country ```r pumpkins %>% count(country, sort = T) ``` ``` ## # A tibble: 30 × 2 ## country n ## <chr> <int> ## 1 United States 9902 ## 2 Canada 2163 ## 3 Germany 1144 ## 4 Italy 512 ## 5 Austria 390 ## 6 Japan 333 ## 7 Belgium 291 ## 8 United Kingdom 179 ## 9 Spain 173 ## 10 Slovenia 158 ## # … with 20 more rows ``` Not that bad this time, likely fewer are enough. --- ### gpc_site ```r pumpkins %>% count(gpc_site, sort = T) ``` ``` ## # A tibble: 173 × 2 ## gpc_site n ## <chr> <int> ## 1 Wiegemeisterschaft Berlin/Brandenburg 453 ## 2 Ohio Valley Giant Pumpkin Growers Weigh-off 376 ## 3 Elk Grove Giant Pumpkin Festival 359 ## 4 Nippon Ichi Dodekabocha Taikai 333 ## 5 Baumans Farm Giant Pumpkin Weigh-off 307 ## 6 Stillwater Harvestfest 298 ## 7 Austrian Weigh-off 285 ## 8 Central Great Lakes Weigh-off 271 ## 9 Safeway World Championship Pumpkin Weigh-Off 270 ## 10 Nekoosa Giant Pumpkin Fest 263 ## # … with 163 more rows ``` Again. --- ### seed_mother ```r pumpkins %>% count(seed_mother, sort = T) ``` ``` ## # A tibble: 6,009 × 2 ## seed_mother n ## <chr> <int> ## 1 <NA> 4424 ## 2 unknown 169 ## 3 Unknown 145 ## 4 2145 McMullen 122 ## 5 2009 Wallace 104 ## 6 1985 Miller 89 ## 7 1911 Urena 72 ## 8 2363 Holland 61 ## 9 2008 Neptune 60 ## 10 1495 Stelts 52 ## # … with 5,999 more rows ``` Way too many levels, also likely not useful if seeds cannot be used in the future. --- ### pollinator_father ```r pumpkins %>% count(pollinator_father, sort = T) ``` ``` ## # A tibble: 3,465 × 2 ## pollinator_father n ## <chr> <int> ## 1 <NA> 5160 ## 2 self 1127 ## 3 Self 1101 ## 4 open 773 ## 5 Open 665 ## 6 2009 Wallace 143 ## 7 2145 McMullen 124 ## 8 unknown 82 ## 9 Unknown 77 ## 10 1985 Miller 68 ## # … with 3,455 more rows ``` Again, likely not useful. --- ### ott (over-the-top inches) <!-- --> Zeros? Likely the same as missing values, only not encoded as `NA`. --- ### ott (over-the-top inches) Also, let's make that metric (cm). ```r pumpkins <- pumpkins %>% mutate(ott = ott*2.54) ``` .center[ <img src="GraphicsSlides/download.png" width="40%" /> ] --- ### est_weight <!-- --> Zeros? Same as with `ott`, likely need to drop them. --- ### est_weight Make that metric as well (kg). ```r pumpkins <- pumpkins %>% mutate(est_weight = est_weight/2.205) ``` The target as well: ```r pumpkins <- pumpkins %>% mutate(weight_lbs = weight_lbs/2.205) %>% rename(weight_kg = weight_lbs) ``` --- ### weight_kg (Target) <!-- --> --- ### variety ```r pumpkins %>% count(variety, sort = T) ``` ``` ## # A tibble: 1 × 2 ## variety n ## <chr> <int> ## 1 <NA> 15965 ``` It's clear what to do with this one. --- ### Looking for missing values ```r *colMeans(is.na(pumpkins)) %>% tidy() %>% arrange(x) %>% rename(pct = x) %>% filter(pct > 0) %>% arrange(-pct) ``` ``` ## # A tibble: 9 × 2 ## names pct ## <chr> <dbl> ## 1 variety 1 ## 2 pollinator_father 0.323 ## 3 weight_kg 0.315 ## 4 est_weight 0.304 ## 5 seed_mother 0.277 ## 6 city 0.0983 ## 7 place 0.0857 ## 8 ott 0.000564 ## 9 pct_chart 0.000564 ``` --- ### Dealing with `NA` and zeros ```r pumpkins <- pumpkins %>% # remove vars with too many missing values or uninteresting select(-c(variety, pollinator_father, seed_mother, pct_chart)) %>% # remove obs with target equal to zero, not possible # remove obs with target NA values filter(weight_kg > 0, !is.na(weight_kg)) %>% # replace zero values within predictors with NA values mutate(across(c(ott, est_weight), ~ ifelse(.x == 0, NA, .x))) ``` --- ### Dealing with `NA` and zeros ```r colMeans(is.na(pumpkins)) ``` ``` ## year type place weight_kg grower_name city ## 0.00000000 0.00000000 0.08744969 0.00000000 0.00000000 0.11553238 ## state_prov country gpc_site ott est_weight ## 0.00000000 0.00000000 0.00000000 0.22036224 0.25375046 ``` There are still some `NA` values, but these are manageable with imputation. --- ### Converting all character variables to factors ```r pumpkins <- pumpkins %>% mutate(across(where(is.character), as.factor)) pumpkins %>% head(5) ``` ``` ## # A tibble: 5 × 11 ## year type place weigh…¹ growe…² city state…³ country gpc_s…⁴ ott est_w…⁵ ## <fct> <fct> <dbl> <dbl> <fct> <fct> <fct> <fct> <fct> <dbl> <dbl> ## 1 2013 Giant… 356 453. Magari… Goff… New Ha… United… Deerfi… 940. NA ## 2 2013 Giant… 356 453. Leland… Canby Oregon United… Bauman… 904. 451. ## 3 2013 Giant… 358 453. Pappas… Mars… Massac… United… Deerfi… 917. NA ## 4 2013 Giant… 359 453. Northr… Suss… New Br… Canada Journe… 884. 423. ## 5 2013 Giant… 359 453. Schult… Deco… Iowa United… Ryan N… 892. 434. ## # … with abbreviated variable names ¹weight_kg, ²grower_name, ³state_prov, ## # ⁴gpc_site, ⁵est_weight ``` --- ### Let's get modelling! <br> <br> .center[ <img src="GraphicsSlides/get in loser.png" width="60%" /> ] --- ### Creating training and holdout splits ```r dt_split <- initial_split(pumpkins, strata = weight_kg) dt_split ``` ``` ## <Training/Testing/Total> ## <8198/2734/10932> ``` --- ### Creating training and holdout splits ```r dt_train <- training(dt_split) dt_test <- testing(dt_split) dim(dt_train) ``` ``` ## [1] 8198 11 ``` ```r dim(dt_test) ``` ``` ## [1] 2734 11 ``` ```r dt_split ``` ``` ## <Training/Testing/Total> ## <8198/2734/10932> ``` --- ### Cross-Validation for stable computation of model metrics ```r folds <- vfold_cv(dt_train, v = 5, strata = weight_kg) folds ``` ``` ## # 5-fold cross-validation using stratification ## # A tibble: 5 × 2 ## splits id ## <list> <chr> ## 1 <split [6557/1641]> Fold1 ## 2 <split [6558/1640]> Fold2 ## 3 <split [6559/1639]> Fold3 ## 4 <split [6559/1639]> Fold4 ## 5 <split [6559/1639]> Fold5 ``` When tuning hyperparameters, these folds can be passed into the tuning function. --- ### Preprocessing with `recipes` The recipe works similarly like the well known `lm(y ~ x)` function. You can add some variables... ```r xg_rec <- recipe(weight_kg ~ ott + est_weight + country, data = dt_train) xg_rec ``` ``` ## Recipe ## ## Inputs: ## ## role #variables ## outcome 1 ## predictor 3 ``` --- ### Preprocessing with `recipes` The recipe works similarly like the well known `lm(y ~ x)` function. You can add some variables... ...or all variables. ```r xg_rec <- recipe(weight_kg ~ ., data = dt_train) xg_rec ``` ``` ## Recipe ## ## Inputs: ## ## role #variables ## outcome 1 ## predictor 10 ``` --- ### Let's see what we still need to do ```r glimpse(pumpkins) ``` ``` ## Rows: 10,932 ## Columns: 11 ## $ year <fct> 2013, 2013, 2013, 2013, 2013, 2013, 2013, 2013, 2013, 2013… ## $ type <fct> Giant Pumpkin, Giant Pumpkin, Giant Pumpkin, Giant Pumpkin… ## $ place <dbl> 356, 356, 358, 359, 359, 361, 362, 363, 363, 363, 366, 366… ## $ weight_kg <dbl> 453.2880, 453.2880, 453.0612, 452.8345, 452.8345, 452.6077… ## $ grower_name <fct> "Magarian, Marc", "Leland, Neal", "Pappas, Ed", "Northrup,… ## $ city <fct> "Goffstown", "Canby", "Marshfield", "Sussex", "Decorah", "… ## $ state_prov <fct> New Hampshire, Oregon, Massachusetts, New Brunswick, Iowa,… ## $ country <fct> United States, United States, United States, Canada, Unite… ## $ gpc_site <fct> Deerfield Fair, Baumans Farm Giant Pumpkin Weigh-off, Deer… ## $ ott <dbl> 939.80, 904.24, 916.94, 883.92, 891.54, 916.94, 939.80, NA… ## $ est_weight <dbl> NA, 450.7937, NA, 423.1293, 433.5601, NA, NA, NA, 407.7098… ``` - Factors: allow for new factor levels - Remove: `type` - Lump together: `grower_name`, `city`, `state_prov`, `country`, `gpc_site` - Impute: variables with missing observations --- ### Preprocessing with `recipes` Removing type: ```r xg_rec <- recipe(weight_kg ~ ., data = dt_train) %>% * step_rm(type) xg_rec %>% prep() %>% juice() %>% head(5) ``` ``` ## # A tibble: 5 × 10 ## year place grower_name city state…¹ country gpc_s…² ott est_w…³ weigh…⁴ ## <fct> <dbl> <fct> <fct> <fct> <fct> <fct> <dbl> <dbl> <dbl> ## 1 2013 1229 Offermann, Ir… Koeln Other Germany XXL-Ku… 605. 137. 125. ## 2 2013 1230 Zimmermann, A… <NA> Other Austria Austri… NA NA 125. ## 3 2013 1231 Giovanni, Riva <NA> Other Italy Festa … 607. 139. 125. ## 4 2013 1232 Martel Junior… Beca… Quebec Canada Potiro… NA NA 125. ## 5 2013 1232 Strange, Mike Dorv… Quebec Canada Vermon… 574. 119. 125. ## # … with abbreviated variable names ¹state_prov, ²gpc_site, ³est_weight, ## # ⁴weight_kg ``` --- ### Preprocessing with `recipes` Imputing missing values with mode for categorical and median for numeric predictors: ```r xg_rec <- recipe(weight_kg ~ ., data = dt_train) %>% step_rm(type) %>% * step_impute_median(all_numeric_predictors()) %>% * step_impute_mode(all_nominal_predictors()) colMeans(is.na(xg_rec %>% prep() %>% juice())) ``` ``` ## year place grower_name city state_prov country ## 0 0 0 0 0 0 ## gpc_site ott est_weight weight_kg ## 0 0 0 0 ``` --- ### Preprocessing with `recipes` .scroll-output[ Lumping factor levels together. Because the value "Other" is already a level in the factors, we need to recode the newly added "other" to "Other" to make it the same category. ```r xg_rec <- recipe(weight_kg ~ ., data = dt_train) %>% step_rm(type) %>% step_impute_median(all_numeric_predictors()) %>% step_impute_mode(all_nominal_predictors()) %>% * step_other(grower_name, city, state_prov, * country, gpc_site, * threshold = 0.01) %>% * step_mutate(across(c(grower_name, city, state_prov, * country, gpc_site), * ~ forcats::fct_recode(., "Other" = "other"))) xg_rec %>% prep() %>% juice() %>% head() ``` ``` ## # A tibble: 6 × 10 ## year place grower_name city state_…¹ country gpc_s…² ott est_w…³ weigh…⁴ ## <fct> <dbl> <fct> <fct> <fct> <fct> <fct> <dbl> <dbl> <dbl> ## 1 2013 1229 Other Other Other Germany Other 605. 137. 125. ## 2 2013 1230 Other Paredes Other Austria Austri… 734. 243. 125. ## 3 2013 1231 Other Paredes Other Italy Other 607. 139. 125. ## 4 2013 1232 Other Other Quebec Canada Potiro… 734. 243. 125. ## 5 2013 1232 Other Other Quebec Canada Vermon… 574. 119. 125. ## 6 2013 1234 Other Other Other Germany Other 577. 120. 124. ## # … with abbreviated variable names ¹state_prov, ²gpc_site, ³est_weight, ## # ⁴weight_kg ``` ] --- ### Preprocessing with `recipes` .scroll-output[ Let's normalize the numerical predictors as well to see undistorted variable importance: ```r xg_rec <- recipe(weight_kg ~ ., data = dt_train) %>% step_rm(type) %>% step_impute_median(all_numeric_predictors()) %>% step_impute_mode(all_nominal_predictors()) %>% step_other(grower_name, city, state_prov, country, gpc_site, threshold = 0.01) %>% step_mutate(across(c(grower_name, city, state_prov, country, gpc_site), ~ forcats::fct_recode(., "Other" = "other"))) %>% * step_normalize(all_numeric_predictors()) xg_rec %>% prep() %>% juice() %>% head() ``` ``` ## # A tibble: 6 × 10 ## year place grower_name city state…¹ country gpc_s…² ott est_w…³ weigh…⁴ ## <fct> <dbl> <fct> <fct> <fct> <fct> <fct> <dbl> <dbl> <dbl> ## 1 2013 0.474 Other Other Other Germany Other -0.817 -1.04 125. ## 2 2013 0.477 Other Paredes Other Austria Austri… 0.156 0.0143 125. ## 3 2013 0.480 Other Paredes Other Italy Other -0.798 -1.03 125. ## 4 2013 0.483 Other Other Quebec Canada Potiro… 0.156 0.0143 125. ## 5 2013 0.483 Other Other Quebec Canada Vermon… -1.05 -1.23 125. ## 6 2013 0.489 Other Other Other Germany Other -1.03 -1.21 124. ## # … with abbreviated variable names ¹state_prov, ²gpc_site, ³est_weight, ## # ⁴weight_kg ``` ] --- ### Preprocessing with `recipes` Let's allow for novel factor levels in the new data and one-hot encode the categorical variables as well: ```r xg_rec <- recipe(weight_kg ~ ., data = dt_train) %>% step_rm(type) %>% step_impute_median(all_numeric_predictors()) %>% step_impute_mode(all_nominal_predictors()) %>% step_other(grower_name, city, state_prov, country, gpc_site, threshold = 0.01) %>% step_mutate(across(c(grower_name, city, state_prov, country, gpc_site), ~ forcats::fct_recode(., "Other" = "other"))) %>% step_normalize(all_numeric_predictors()) %>% * step_novel(all_nominal_predictors()) %>% * step_dummy(all_nominal_predictors(), one_hot = TRUE) ``` --- ### Preprocessing with `recipes` .scroll-output[ Prepping and baking will apply all recipe steps to the new data, i.e. the holdout used for evaluation at a later stage. ```r xg_rec %>% prep() %>% bake(dt_train) %>% head() ``` ``` ## # A tibble: 6 × 95 ## place ott est_wei…¹ weigh…² year_…³ year_…⁴ year_…⁵ year_…⁶ year_…⁷ year_…⁸ ## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 0.474 -0.817 -1.04 125. 1 0 0 0 0 0 ## 2 0.477 0.156 0.0143 125. 1 0 0 0 0 0 ## 3 0.480 -0.798 -1.03 125. 1 0 0 0 0 0 ## 4 0.483 0.156 0.0143 125. 1 0 0 0 0 0 ## 5 0.483 -1.05 -1.23 125. 1 0 0 0 0 0 ## 6 0.489 -1.03 -1.21 124. 1 0 0 0 0 0 ## # … with 85 more variables: year_X2019 <dbl>, year_X2020 <dbl>, ## # year_X2021 <dbl>, year_new <dbl>, grower_name_Karkos..Udo <dbl>, ## # grower_name_Other <dbl>, grower_name_new <dbl>, city_Paredes <dbl>, ## # city_Other <dbl>, city_new <dbl>, state_prov_Alberta <dbl>, ## # state_prov_Antwerp <dbl>, state_prov_Brandenburg <dbl>, ## # state_prov_California <dbl>, state_prov_Colorado <dbl>, ## # state_prov_Connecticut <dbl>, state_prov_Indiana <dbl>, … ``` ] --- ### Preprocessing with `recipes` That's the recipe done! The recipe allows to - prevent data leakage - create stepwise data preprocessing that is easy to understand - no manual column indexing, subsetting, and whatever shenanigans Python requires you to do <br> <blockquote class="twitter-tweet"><p lang="en" dir="ltr">Silly complaint: "this tool keeps you from thinking." No one stops thinking when they use good tools. They think about more important things</p>— David Robinson (@drob) <a href="https://twitter.com/drob/status/769161059743756289?ref_src=twsrc%5Etfw">August 26, 2016</a></blockquote> <script async src="https://platform.twitter.com/widgets.js" charset="utf-8"></script> --- ### Setting up the model .scroll-output[ ```r xg_spec <- boost_tree( trees = 750, tree_depth = tune(), min_n = tune(), loss_reduction = tune(), sample_size = tune(), mtry = tune(), learn_rate = tune() ) %>% set_engine("xgboost", importance = "impurity") %>% set_mode("regression") xg_spec ``` ``` ## Boosted Tree Model Specification (regression) ## ## Main Arguments: ## mtry = tune() ## trees = 750 ## min_n = tune() ## tree_depth = tune() ## learn_rate = tune() ## loss_reduction = tune() ## sample_size = tune() ## ## Engine-Specific Arguments: ## importance = impurity ## ## Computational engine: xgboost ``` ] --- ### Setting up the workflow .scroll-output[ ```r xg_wflow <- workflow() %>% add_recipe(xg_rec) %>% add_model(xg_spec) xg_wflow ``` ``` ## ══ Workflow ════════════════════════════════════════════════════════════════════ ## Preprocessor: Recipe ## Model: boost_tree() ## ## ── Preprocessor ──────────────────────────────────────────────────────────────── ## 8 Recipe Steps ## ## • step_rm() ## • step_impute_median() ## • step_impute_mode() ## • step_other() ## • step_mutate() ## • step_normalize() ## • step_novel() ## • step_dummy() ## ## ── Model ─────────────────────────────────────────────────────────────────────── ## Boosted Tree Model Specification (regression) ## ## Main Arguments: ## mtry = tune() ## trees = 750 ## min_n = tune() ## tree_depth = tune() ## learn_rate = tune() ## loss_reduction = tune() ## sample_size = tune() ## ## Engine-Specific Arguments: ## importance = impurity ## ## Computational engine: xgboost ``` ] --- ### Setting up a tuning grid .scroll-output[ ```r xg_grid <- grid_latin_hypercube( tree_depth(), min_n(), loss_reduction(), sample_size = sample_prop(), finalize(mtry(), dt_train), learn_rate(), size = 10 ) xg_grid ``` ``` ## # A tibble: 10 × 6 ## tree_depth min_n loss_reduction sample_size mtry learn_rate ## <int> <int> <dbl> <dbl> <int> <dbl> ## 1 10 26 3.64e- 4 0.811 10 2.13e- 2 ## 2 9 29 1.40e- 8 0.202 5 3.03e- 3 ## 3 4 9 1.89e- 3 0.835 10 7.75e- 9 ## 4 7 23 3.35e-10 0.930 6 8.11e-10 ## 5 11 3 2.39e- 8 0.562 2 2.22e- 7 ## 6 2 21 1.16e- 1 0.106 7 3.45e-10 ## 7 4 16 5.24e- 1 0.282 4 4.81e- 5 ## 8 14 13 2.32e+ 0 0.665 8 1.42e- 3 ## 9 13 36 2.02e- 6 0.535 8 1.23e- 5 ## 10 6 37 4.58e- 6 0.432 1 2.80e- 6 ``` ] --- ### Tuning the model .scroll-output[ This is the most time-intensive part with large amounts of data, so we're gonna use parallelisation here (using more of your CPU's cores). Make sure to edit this code according to your needs. ```r start_time = Sys.time() unregister_dopar <- function() { env <- foreach:::.foreachGlobals rm(list=ls(name=env), pos=env) } cl <- makePSOCKcluster(6) registerDoParallel(cl) xg_tune <- tune_grid( object = xg_wflow, resamples = folds, grid = xg_grid, control = control_grid(save_pred = TRUE) ) stopCluster(cl) unregister_dopar() end_time = Sys.time() end_time - start_time ``` ``` ## Time difference of 1.136629 mins ``` ] --- ### Looking at tuning results .scroll-output[ ```r xg_tune %>% show_best("rsq") %>% glimpse() ``` ``` ## Rows: 5 ## Columns: 12 ## $ mtry <int> 2, 9, 8, 10, 6 ## $ min_n <int> 7, 3, 24, 34, 13 ## $ tree_depth <int> 7, 11, 12, 6, 14 ## $ learn_rate <dbl> 1.545806e-02, 1.194447e-07, 3.635109e-05, 4.436419e-06,… ## $ loss_reduction <dbl> 1.125698e-09, 9.118926e-05, 1.658851e-09, 1.123321e+01,… ## $ sample_size <dbl> 0.7507816, 0.6794007, 0.9374669, 0.4770123, 0.2252345 ## $ .metric <chr> "rsq", "rsq", "rsq", "rsq", "rsq" ## $ .estimator <chr> "standard", "standard", "standard", "standard", "standa… ## $ mean <dbl> 0.8665343, 0.8370460, 0.8226847, 0.8031926, 0.7912635 ## $ n <int> 5, 5, 5, 5, 5 ## $ std_err <dbl> 0.010287916, 0.004288253, 0.004602997, 0.004568200, 0.0… ## $ .config <chr> "Preprocessor1_Model01", "Preprocessor1_Model03", "Prep… ``` ] --- ### Selecting the best model configuration .scroll-output[ ```r xg_final_wflow <- xg_wflow %>% finalize_workflow(select_best(xg_tune, metric = "rsq")) xg_final_wflow ``` ``` ## ══ Workflow ════════════════════════════════════════════════════════════════════ ## Preprocessor: Recipe ## Model: boost_tree() ## ## ── Preprocessor ──────────────────────────────────────────────────────────────── ## 8 Recipe Steps ## ## • step_rm() ## • step_impute_median() ## • step_impute_mode() ## • step_other() ## • step_mutate() ## • step_normalize() ## • step_novel() ## • step_dummy() ## ## ── Model ─────────────────────────────────────────────────────────────────────── ## Boosted Tree Model Specification (regression) ## ## Main Arguments: ## mtry = 2 ## trees = 750 ## min_n = 7 ## tree_depth = 7 ## learn_rate = 0.0154580616226708 ## loss_reduction = 1.12569835788786e-09 ## sample_size = 0.750781629404519 ## ## Engine-Specific Arguments: ## importance = impurity ## ## Computational engine: xgboost ``` ] --- ### Fitting the final model on the initial split (entire training data) ```r xg_final_fit <- xg_final_wflow %>% last_fit(dt_split) xg_final_fit ``` ``` ## # Resampling results ## # Manual resampling ## # A tibble: 1 × 6 ## splits id .metrics .notes .predictions .workflow ## <list> <chr> <list> <list> <list> <list> ## 1 <split [8198/2734]> train/test split <tibble> <tibble> <tibble> <workflow> ``` Let's inspect what's in there. --- ### Inspecting our final fit ```r xg_final_fit %>% collect_metrics() ``` ``` ## # A tibble: 2 × 4 ## .metric .estimator .estimate .config ## <chr> <chr> <dbl> <chr> ## 1 rmse standard 44.1 Preprocessor1_Model1 ## 2 rsq standard 0.890 Preprocessor1_Model1 ``` --- ### Inspecting our final fit ```r xg_final_fit %>% collect_predictions() ``` ``` ## # A tibble: 2,734 × 5 ## id .pred .row weight_kg .config ## <chr> <dbl> <int> <dbl> <chr> ## 1 train/test split 367. 1 453. Preprocessor1_Model1 ## 2 train/test split 345. 6 453. Preprocessor1_Model1 ## 3 train/test split 379. 18 449. Preprocessor1_Model1 ## 4 train/test split 371. 22 447. Preprocessor1_Model1 ## 5 train/test split 400. 26 445. Preprocessor1_Model1 ## 6 train/test split 393. 30 444. Preprocessor1_Model1 ## 7 train/test split 385. 31 444. Preprocessor1_Model1 ## 8 train/test split 372. 33 442. Preprocessor1_Model1 ## 9 train/test split 382. 40 439. Preprocessor1_Model1 ## 10 train/test split 389. 41 438. Preprocessor1_Model1 ## # … with 2,724 more rows ``` With this, we can make a useful plot. --- ### Inspecting our final fit .scroll-output[ ```r xg_final_fit %>% collect_predictions() %>% ggplot(aes(weight_kg, .pred)) + geom_point(alpha = 0.5, colour = "midnightblue") + geom_abline(lty = "dashed", colour = "grey50", size = 0.75) + labs(title = "Predictions vs. Actuals of Holdout") ``` <img src="Intro-to-Tidymodels_files/figure-html/unnamed-chunk-59-1.png" width="100%" /> ] --- ### Variable Importance Plot .scroll-output[ ```r xg_final_fit %>% extract_workflow() %>% extract_fit_parsnip() %>% vi() %>% slice_max(order_by = Importance, n = 7) %>% ggplot(aes(Importance, reorder(Variable, Importance))) + geom_col(fill = "midnightblue", colour = "white") + labs(title = "Variable Importance", subtitle = "Only the seven most important predictors are shown.", y = "Predictor", x = "Relative Variable Importance") + theme_bw() + theme(plot.title = element_text(face = "bold", size = 12), plot.subtitle = element_text(face = "italic", colour = "grey50")) ``` <img src="Intro-to-Tidymodels_files/figure-html/unnamed-chunk-60-1.png" width="100%" /> ] --- ### Making predictions on new data .scroll-output[ ```r one_pumpkin <- dt_test %>% select(-weight_kg) %>% sample_n(1) one_pumpkin %>% glimpse() ``` ``` ## Rows: 1 ## Columns: 10 ## $ year <fct> 2016 ## $ type <fct> Giant Pumpkin ## $ place <dbl> 1188 ## $ grower_name <fct> "Marin, Courant" ## $ city <fct> NA ## $ state_prov <fct> Quebec ## $ country <fct> Canada ## $ gpc_site <fct> Potirothon de Gentilly ## $ ott <dbl> 657.86 ## $ est_weight <dbl> 176.8707 ``` ] --- ### Making predictions on new data Feeding new data (in this case from the holdout) into the model enables you to make predictions. All recipe steps are applied and the workflow equally executed. ```r xg_final_fit %>% extract_workflow() %>% predict(one_pumpkin) ``` ``` ## # A tibble: 1 × 1 ## .pred ## <dbl> ## 1 180. ``` --- ### Making predictions on new data .scroll-output[ If you want to keep the characteristics of the new observation you made a prediction on, use `augment()` instead of `predict()`. ```r xg_final_fit %>% extract_workflow() %>% augment(one_pumpkin) %>% glimpse() ``` ``` ## Rows: 1 ## Columns: 11 ## $ year <fct> 2016 ## $ type <fct> Giant Pumpkin ## $ place <dbl> 1188 ## $ grower_name <fct> "Marin, Courant" ## $ city <fct> NA ## $ state_prov <fct> Quebec ## $ country <fct> Canada ## $ gpc_site <fct> Potirothon de Gentilly ## $ ott <dbl> 657.86 ## $ est_weight <dbl> 176.8707 ## $ .pred <dbl> 180.2735 ``` ] --- ### That's all! Of course you can fit a plethora of different models. These are just slightly different, the `tidymodels` framework stays the same, that's the superpower! For instance, here is the specification for an elastic net: ```r en_spec <- linear_reg(penalty = tune(), mixture = tune()) %>% set_engine("glmnet") en_wflow <- workflow() %>% add_recipe(en_rec) %>% add_model(en_spec) en_grid <- grid_latin_hypercube( penalty(), mixture(), size = 100 ) ``` --- # That's all! We hope this tutorial was useful! Feel free to make use of the code and don't despair if you encounter errors, that's totally normal. For further questions, feel free to reach out to us. Make sure to stay updated on our socials and via our website where all resources and dates are also published. <br> .center[ <img src="GraphicsSlides/Logo RUG hell.png" width="60%" /> **[Website](https://rusergroup-sg.ch/) | [Instagram](https://www.instagram.com/rusergroupstgallen/?hl=en) | [Twitter](https://twitter.com/rusergroupsg)** ] --- class: middle, inverse, hide-logo # Thank you for attending! <em style="color:#404040">The material provided in this presentation including any information, tools, features, content and any images incorporated in the presentation, is solely for your lawful, personal, private use. You may not modify, republish, or post anything you obtain from this presentation, including anything you download from our website, unless you first obtain our written consent. You may not engage in systematic retrieval of data or other content from this website. We request that you not create any kind of hyperlink from any other site to ours unless you first obtain our written permission.</em>